Table of Contents

Multilevel Linear Model

Introduction

Linear Models and Generalized Linear Models (GLM) are a very useful tool to understand the effects of the factor you want to examine. These models are also used for prediction: Predicting the possible outcome if you have new values on your independent variables (and this is why independent variables are also called predictors).

Although these models are powerful for analyzing the data gained from HCI experiments, one concern we have is that they do not carefully handle “repeated-measure”-ness (e.g., individual differences of the participants). Multilevel models can accommodate such differences. Very roughly speaking, it is a repeated-measure version of linear models or GLMs.

A multilevel model is often referred as a “hierarchical,” “random-effect” or “mixed-effect” model. The term of “random effects” is often confusing because it is used to mean different things. In this wiki, I follow Data Analysis Using Regression and Multilevel/Hierarchical Models by Andrew Gelman and Jennifer Hill. I explain what “random effects” and “fixed effects” (opposite to random effects) mean in this page; however, people say different opinions about them (as Gelman and Hill's book explains). So, I won't go into detailed discussions about how we should consider these factors.

High-level Understanding

Before jumping into examples of multilevel linear models, let's have a high-level understanding of multilevel linear models. Let's think about a very simple experiment: Comparing two techniques: Technique A and Technique B. Your measurement is performance time. In your experiment, 10 participants performed some tasks with both techniques; thus, the experiment is a within-subject design. If you do not consider the “Participant” factor, you will do a linear regression analysis where your independent variable is Technique, and if its coefficient is non-zero, you will argue that the difference of the techniques caused some differences in the performance time.

However, this analysis does not fully consider the experiment design you had: the differences between the participants. For example, some participants are more comfortable with using computers than the others, and thus, their overall performance might have been better. Or the differences of the techniques might have caused different levels of the effects depending on the participants. Some participants had similar performance with both techniques, and some had much better performance with one technique. The linear regression above tries to represent the data with one line, and unfortunately it aggressively aggregates such differences which may matter to your results in this case.

Multilevel regression, intuitively, allows us to have a model for each group represented in the within-subject factors. Thus, in this example, instead of having one linear model, you will build 10 linear models, each of which is for each participant, and do analysis on whether the techniques caused differences or not. In this way, we can also consider individual differences of the participants (they will be described as differences of the models). What multilevel regression actually does is something like between completely ignoring the within-subject factors (sticking with one model) and building a separate model for every single group (making n separate models for n participants). But I think this exaggerated explanation well describes how multilevel regression is different from simple regression, and is easy to understand.

Varying-intercept vs. varying-slope

The previous section gave you a rough idea of what multilevel models are like. For the factors in which we want to take individual differences into account, we treat them as random effects and build each model for each level of these factors. But one question is still remaining. How do we make “different models”?

If we build a separate model for each participant, for example, analysis would be very time-consuming. With the example we used above, we would have 10 models in total. Some may have significant effects of Technique, and some may not. In that case, how can we generalize the results and say if Technique is really a significant factor?

Multilevel models can remove this trouble. Instead of building completely different models, multilevel regression changes the coefficients of only some parameters in the model for each level of random effects. Thus, the coefficients of the other factors remain the same, and model analysis becomes much easier.

Roughly speaking, there are two strategies you can take for random effects: varying-intercept or varying-slope (or do both). Varying-intercept means differences in random effects are described as differences in intercepts. For example, in the previous example, we will have 10 different intercepts (each for each participant), but the coefficient for Technique is constant. Varying-slope means vice versa: changing the coefficients of some factors.

In many cases, factors, more precisely independent variables or predictors, are something you want to examine. Thus, you want to generalize results for them. And the intercept is usually something you don't include in your analysis, so it can be very complicated. Therefore, unless you have some clear reasons, varying-intercept models will work for you. They won't be computationally complicated and their results will be straightforward to interpret. In this page, I show an example of varying-intercept models.

Fixed effects vs. random effects

Although I won't go into detailed discussions about the difference between “random effects” and “fixed effects” (the opposition to random effects), it is important to have a high-level understanding of their differences. Then you won't get confused when you read other literature or try to use other statistical software.

This is my interpretation of differences between fixed and random effects: In multilevel regression, you will build multiple models. The coefficients of the fixed effects are constant or “fixed” across the models. In contrast, the coefficients of the random effects can be different, or (more or less) can be “random”. Random effects can be factors whose effects you are not interested in but whose variances you want to remove from your model. “Participants” are a good example of random effects. Generally, we are not interested in how different the performance of each participant is. But we do not want to let the individual differences of the participants affect the analysis.

If you know a better way to understand the difference between fixed effects and random effects, please share it with us! :)

R example

I prepare hypothetical data to try out multilevel linear regression. You can download it from here. We are going to use that file in the following example.

Let me explain a hypothetical context of this hypothetical data :). We conducted an experiment with a touch-screen desktop computer. Our objective is to examine how mouse-based and touch-based interactions affect performance time in different applications. In this system, participants could use either mouse click or direct touch to select an object or an item in a menu. They could also use a mouse wheel or a pinch gesture to zoom in/out the screen. We just let them which way to interact with the system so that we could measure how people tend to use mouse-based and touch-based interactions. Our design is also within-subject across the three applications tested in this experiment.

The file contains the results of this experiment. I think most of the columns are just guessable. Time is the time (sec) for completing the task in each application (indicated by Application). MouseClick, Touch, MouseWheel, and PinchZoom are the counts for mouse clicks, direct touch, zoom with the mouse wheel, and zoom with the pinch gesture.

Now we want to examine how these numbers of MouseClick, Touch, MouseWheel, and PinchZoom affect performance time. Of course, there are a number of models we can think of, but let's try something simple:

;#; ## Time = intercept + a * Application + b * MouseClick + c * Touch + d * MouseWheel + e * PinchZoom. ## ;#;

However, we want to take the effects of our experimental design into account. To do this, we make a tweak on the model above.

;#; ## Time = intercept + a * Application + b * MouseClick + c * Touch + d * MouseWheel + e * PinchZoom + Random(1|Participant). ## ;#;

What Random(1|Participant) is trying to mean is that we are going to change the intercept for each participant. Yes, we are making varying-intercept models. We assume that individual differences by participants can be explained by differences in the intercept. In this manner, we can remove some effects caused by the individual differences to the other factors.

There are a number of ways to do multilevel linear regression in R, but we are using the lme package. We also import the data.

Now we are building the model.

Again, (1|Participant) is the part for the random effect. “1” means the intercept. So this means we are changing the intercept for each participant. To find the models, we use the restricted maximum likelihood (REML). I won't go into the details of REML here, but in most cases, you can simply use REML. But if you have specific reasons, you can use the maximum likelihood method by doing REML=F.

Now let's take a look at the results.

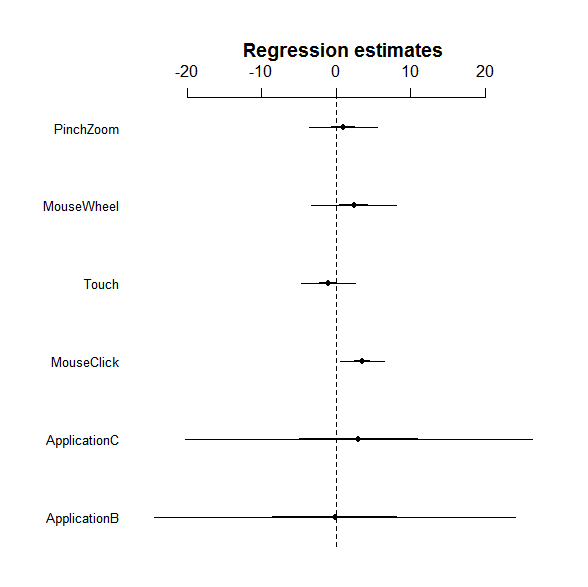

So you can see the estimated coefficient for each factor, but it is kinda unclear whether it is really significant or not. Let's try coefplot. Unfortunately coefplot in the arm package does not work with the lme object. We thus use a fixed version of coefplot.

A thick and thin line represent the 1SD and 2SD ranges. So it looks like that MouseClick has a significant effect because its 2SD does not overlap the zero.

MCMC

So far, so good. We successfully created a model and looks like we have something interesting there. But we are not quite sure about which fixed effects are significant yet.

If you have read the multiple linear regression page, you may think we can do an ANOVA test. Technically yes, we can do an ANOVA test. However, it is not quite straightforward to run it because of random effects. In this case, we cannot really be sure about whether the test statistic is F-distributed. There have been several attempts to address this and make an ANOVA test useful for multilevel regression, such as the Kenward-Roger correction. However, it is disputable if this correction is good enough so that we can assume the corrected test statistic is F-distributed. Thus, ANOVA with the Kenward-Roger correction has not been implemented yet in R (as of June 2011).

Instead, we use the Markov Chain Monte Carlo (MCMC) method to estimate the coefficient and highest probability density credible intervals (HPD credible intervals). Here, I will skip detailed discussions of what MCMC does and what HPD credible intervals mean. (I will do it sometime later at a separate page). For now, let's simply think that MCMC tries to re-estimate the coefficient for each factor based on the results we got with lmer() so that we can have better estimation.

We are going to use the languageR package to run MCMC. pvals.fnc() can take a bit of time for calculation.

The parameter nsim is the number of the simulation to run. Here, I set 10000, but you may need to tweak it to make sure that the estimation is converged.

MCMCmean is the re-estimated coefficient by MCMC. As you can see in this example, you may see relatively large differences between the estimation by REML and the one by MCMC. As far as I understand, the estimation by MCMC is more reliable than the one by REML. So we are going to use the results by MCMC.

As you can see in the results, only MouseClick has a significant positive effect on increasing performance time. So the results imply that reducing the number of mouse clicks may decrease the overall task completion time in the applications tested here. PinchZoom's effect (0.1278, 95% HPD CI:-5.1017,5.276) is smaller than MouseWheel (2.7309, 95% HPD CI:-3.8874, 9.867). Thus, encouraging users to do pinching gestures for zoom operations might contribute to decrease in the overall task completion time.

Unfortunately, there aren't many things to say from the results here, but I guess you have gotten the idea of how you interpret the results of multi-level linear models.

Multicollinearlity

Lastly, let's make sure that we don't have multicollinearity problems. For lmer(), we cannot use the vif()function. Instead, we can use the function provided by Austin F. Frank (https://hlplab.wordpress.com/2011/02/24/diagnosing-collinearity-in-lme4/).

Copy the part of the vif.mer() function (or just copy all) in https://raw.github.com/aufrank/R-hacks/master/mer-utils.R, and paste them into your R console. And just use the vif.mer() function.

So, in this example, we are fine. If the value is higher than 5, you probably should remove that factor, and if it is higher than 2.5, you should consider removing it.

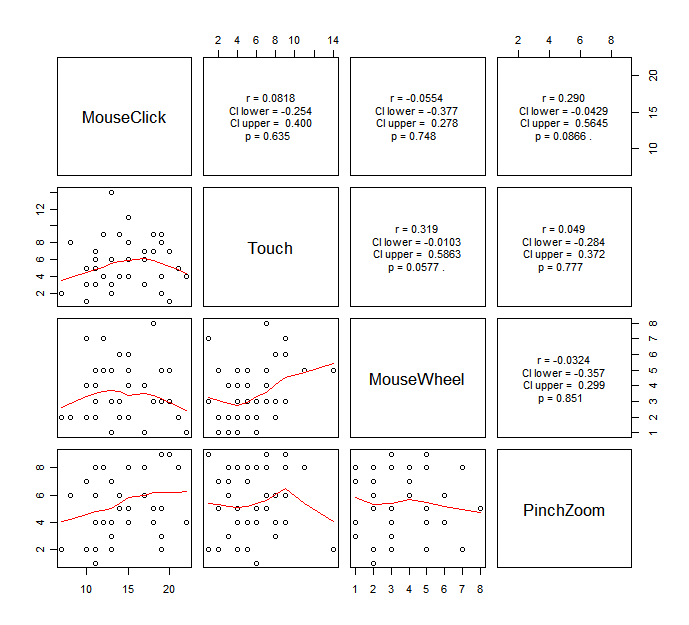

Another way to examine multicollinearlity is to look at the correlation of the two of the independent variables. I wrote a code to do this quickly.

Copy the code above, and paste it into your R console. Then, run the following code.

You will get the following graph.

It is kinda hard to say which variable we should consider to remove from this, but I would say if you see any significant strong correlation, you probably should be careful about if it may cause multicollinearity.

How to report

Once you are done with your analysis, you will have to report the following things:

- Your model,

- How you chose the model (either you just defined, you used AIC, or you used CV),

- R-squared and adjusted R-squared (lmer() does not provide them, and I am looking into how to get them).

- How you tested the estimated coefficients (either you did MCMC or ran a F-test with the Kenward-Roger correction).

- Estimated value for each coefficient, and

- Significant effects with p value (if any).

For the last two items, you can say something like “MouseClick showed a significant effect (p<0.05). Its estimated coefficient with the MCMC method was 4.05 (95% HPD credible interval = [0.75, 7.63]).”

It is probably acceptable that you simply report the direction of each significant effect (positive or negative) if you do not really care about the actual value of the coefficient. But I think you should report at least whether each significant effect contributes to the dependent variable in a positive or negative way.

Discussion

Anticipating Freud and Jung, the Symbolists mined mythology and dream imagery for a visual language of the soul. More a philosophical approach than an actual style of art, they influenced their contemporaries in the Art Nouveau movement and Les Nabis.

for More information <a href=http://canvas-online.com/>click here</a>

- Comprehend more at <a href=http://www.fujianxiangzhu.com>Art Website</a>

<a href="http://kamagradxt.com/">kamagra oral jelly available in india</a>

kamagra oral jelly wirkung frauen

<a href="http://kamagradxt.com/">kamagra chewable 100 mg reviews</a>

super kamagra kaufen

http://kamagradxt.com/

kamagra forum srpski

<a href="http://kamagradxt.com/">kamagra oral jelly review forum</a>

kamagra oral jelly viagra

<a href="http://kamagradxt.com/">buy kamagra 100 mg</a>

kamagra oral jelly buy online

http://kamagradxt.com/

india kamagra 100mg chewable tablets

10%-15% Daily Profit! Earn your bitcoins today, become a millionere tomorrow!

Affiliate program and referral commision 5%. Invite a freind and earn 5% commision from each deposit!

<a href="https://bit.ly/2uCcLt2">CLICK HERE!</a>

===============================

Инвестиции в криптовалюту - https://bit.ly/2uCcLt2!

10%-15% ежедневного дохода! Заработайте свои биткойны сегодня, станьте миллионером завтра!

Партнерская програма и реферальная коммиссия 5%. Пригласите друга и получите 5% с каждого депозита!

<a href="https://bit.ly/2uCcLt2">ПЕРЕЙТИ НА САЙТ!</a>

Download: http://sewerrepairpro.com/showdown-1963-audie-murphy-western-brrip-xvid-wujxf.html - Showdown (1963) - Audie Murphy - Western - BRRip XviD 11 minutes .

BBC.The.Life.of.Mammals.8of9.Life.in.the.Trees.720p.HDTV.x264.AAC .mkv

Download: http://cgunitedsc.com/serie/Klein-Dorrit - Klein Dorrit .

BitLord.com

Download: http://jumpstart-pros.com/serie/2057-Unser-Leben-in-der-Zukunft - 2057 – Unser Leben in der Zukunft .

Dance Moms S06E32 Two Teams Two Studios Part One HDTV x264-[NY2 - - [SRIGGA -

Download: http://cookingyoursassoff.com/serie.php?serie=1286 - The White Queen .

Advantageous

Download: http://infobawel.com/serie/Aria-The-Animation - Aria The Animation .

Love Everlasting 2016 HDRip AC3 2 0 x264-BDP[SN -

Download: http://cabuaan.com/serie/Ordinary-Lies - Ordinary Lies .

Aleksandr Marshal I Vetheslav Bukov - Gde Nothyet Solnce

Download: http://infobawel.com/serie/Retribution - Retribution .

Трите лица на Ана Епизод 9 / Tres veces Ana

Download: http://sarakarinoukri.com/movie/genesis-the-fall-of-eden-6pmw.html - Genesis: The Fall of Eden (2018) .

Miles Davis - Tutu [Isohunt.to -

Download: http://jumpstart-pros.com/serie/serie/Two-and-a-Half-Men/1/7-Ich-verstehe/OpenLoad - OpenLoad .

Celebrity Antiques Road Trip S06E01 - Jennifer Saunders and Patricia Potter

Download: http://cookingyoursassoff.com/serie.php?serie=1330 - Low Winter Sun .

Teenies Auf Der Sex Akademie avi

Download: http://vettriselvan.com/capitulo.php?serie=4277&temp=1&cap=04 - ZombieLars (2017) 1X04 - Capitulo 04 .

The Lobster2016

Download: http://jumpstart-pros.com/serie/Tamako-Market - Tamako Market .

2012 the movie

Download: http://mittlivsomtransa.com/engine/go.php?file2=Watch Deep State Season 1 Episode 5 Movie Free Full Movie Online Free&lp=5 - WATCH THIS LINK .

the purge election year 720p

Download: http://cookingyoursassoff.com/serie.php?serie=1863 - ANZAC Girls .

[avi - The.BFG.2016.DVDRip.XviD.AC3-iFT[PR...

Download: http://infobawel.com/serie/El-Chapo - El Chapo .

May 2016 (3973)

Download: http://comprarlevitraonline.com/movie/veronica-dvd-streaming/5/ - OK.ru VF Dvdrip .

True Blood

Download: http://duendeimagery.com/serie/Potemayo - Potemayo .

Pain Killer By Little Big Town

Download: http://bad-txt-sec-test1.com/movie/downsizing-6d7o.html - Короче .

Greg Kinnear,

Download: http://keneganprobate.com/ver/the-pilot-2x05.html - 2x05 La Piloto .

Little Britain

Download: http://cabuaan.com/serie/Monster-Strike - Monster Strike .

Dont Breathe 2016 720p BRRip x264 AAC-ETRG

Download: http://infobawel.com/vorgeschlagene-serien/vote:12882 - Hard Rock Medical .

A History of Portugal and the Portuguese Empire: From Beginnings to 1807 (Volume 1)

Download: http://cgunitedsc.com/vorgeschlagene-serien/vote:11527 - Invasion von der wega .

TheUpperFloor 16 11 15 Karlee Grey And Penny Pax XXX SD MP4-RARBG

Download: http://merchantscirclellc.com/ver/vice-6x04.html - 6x04 VICE .

Mkv Torrents

Download: http://vettriselvan.com/serie.php?serie=2111 - Toda la Verdad sobre la Comida .

THE WALKING DEAD

Download: http://tl-hardware.com/serie/Pinocchio - Pinocchio .

Drunk History S04E08 720p HDTV x264-FLEET[PRiME -

Download: http://cabuaan.com/serie/D-C-Da-Capo - D.C.: Da Capo .

Pakistani Movies

Download: http://prsti.com/category/black-panther-2018/ - Black Panther (2018) .

??? BJ?? ?? (????TV,??????,???,??,????,??TV,??TV,????,????,BJ??,BJ,??,2??,3??,?????,?????TV)

Download: http://manishakumari.com/serie/This-Is-Us - This Is Us .

1 de outubro de 2015

Download: http://manishakumari.com/serie/Nabari-no-Ou - Nabari no Ou .

[share_ebook - OpenVPN: Building and Integrating Virtual Private Networks: Learn how to build secure VPNs using this powerful Open Source application

Download: http://cabuaan.com/serie/Eureka - Eureka - Die geheime Stadt .

Nocturna 2015 BDRip XviD AC3 EVO

Download: http://infobawel.com/serie/Workaholics - Workaholics .

Internet Download Manager (IDM) 6 26 Build 10 Multilingual + Patch (Safe) [Sa...

Download: http://flasheur.fr/movie/gun-crazy-19790/ - Gun Crazy .

Finding Dory

Download: http://krbzbwimbokrp.com/vorgeschlagene-serien/vote:12844 - Agatha Christie – Kleine Morde .

Arrow S05E07 HDTV x264-LOL[ettv -

Download: http://manishakumari.com/vorgeschlagene-serien/vote:12341 - Leah Remini: Ein Leben nach Scientology .

In Absentia (2000)

Download: http://krbzbwimbokrp.com/vorgeschlagene-serien/vote:12937 - Bomb Girls .

[Nipponsei - Angel beats! Original Soundtrack.zip

Download: http://houseexpressre.com/data-mining-the-deceased-2016-720p-amzn-webrip-aac2-0-x264-ntg-wujqk.html - Data Mining the Deceased 2016 720p AMZN WEBRip AAC2 0 x264-NTG 25 minutes .

Нюанси синьо

Download: http://krbzbwimbokrp.com/serie/Baggage-Battles-Die-Koffer-Jaeger - Baggage Battles – Die Koffer-Jäger .

Titanic 1996 HD ????? ????? 5.9

Download: http://infobawel.com/serie/Double-J - Double-J .

161117.??TV.?????.E34.1080p.HDTV.HDMI-Yoonyule.ts

Download: http://sewerrepairpro.com/исчезновение-СЃРёРґРЅРё-холла-the-vanishing-of-sidney-hall-2018-hdrip-wujom.html - Рсчезновение РЎРёРґРЅРё Холла / The Vanishing of Sidney Hall (2018) HDRip РѕС‚ Scarabey | iTunes 17 minutes .

?? ??

Download: http://merchantscirclellc.com/ver/dragonball-super-2015-1x49.html - 1x49 Dragon Ball Super .

Compton By Dr. Dre

Download: http://longislandmvp.com/genero/novelas/ - Contacto .

More results from onlinemovies.is

Download: http://cabuaan.com/vorgeschlagene-serien/vote:12957 - L.A. Law .

PortuguA?s (Brazil)

Download: http://cookingyoursassoff.com/capitulo.php?serie=2422&temp=2&cap=04 - Humans 2X04 - Capitulo 04 .

norm of the north 720p

Download: http://jumpstart-pros.com/serie/1993-Jede-Revolution-hat-ihren-Preis - 1993 - Jede Revolution hat ihren Preis .

BLACKED 16 11 16 Brandi Love XXX 1080p MP4-KTR[rarbg -

Download: http://dynaforceproducts.com/serie/Ore-tachi-ni-Tsubasa-wa-Nai - Ore-tachi ni Tsubasa wa Nai .

Renoir RELOADED

Download: http://infobawel.com/serie/Alles-ausser-Mord - Alles auГџer Mord! .

Zoom Legendado Online Esta e a historia de tres artistas: Edward (Gael Garcia Bernal), vaidoso diretor de cinema que precisa refilmar o final de um longa contra sua vontade e de repente comeca a ter problemas sexuais. Michelle (Mariana Ximenes), modelo brasileira que deixa namorado e carreira nos Estados Unidos para voltar ao seu pais e escrever um livro. E Emma (Alison Pill), ...

Download: http://cookingyoursassoff.com/serie.php?serie=1780 - YouВґre the Worst .

Aleksandr Zvincov 20 Luchshih Pesen 2008

Download: http://shsh-1.com/all/feelandjohnnynorbergonedreamtm058web2016ukhx-t3407815.html - Feel_And_Johnny_Norberg-One_Dream-(TM058)-WEB-2016-UKHx .

Jason Bourne 2016 1080p BluRay x264-SPARKS[rarbg -

Download: http://jumpstart-pros.com/serie/Weisst-du-eigentlich-wie-lieb-ich-dich-hab - WeiГџt du eigentlich, wie lieb ich dich hab? .

suicide squad 2016 extended 480p web dl x264 rmteam mkv

Download: http://infobawel.com/vorgeschlagene-serien/vote:13435 - Die Viersteins .

No Rest For The Wicked Diskord Re Twerk Lykke Li

Download: http://bad-txt-sec-test1.com/roger-daltrey-as-long-as-i-have-you-2018-mp3-РѕС‚-vanila-wujiu.html - Roger Daltrey - As Long As I Have You (2018) MP3 РѕС‚ Vanila 11 minutes .

Warcraft: The Beginning...

Download: http://keneganprobate.com/serie/shooter/temporada-0.html - Temporada 0 .

Madam Secretary

Download: http://tl-hardware.com/vorgeschlagene-serien/vote:13136 - Die Verschwörer - Im Namen der Gerechtigkeit .

Nashville.1x14.[Reparado - .HDTV.XviD.[www.DivxTotaL.com - .avi

Download: http://jumpstart-pros.com/serie/Into-the-Badlands - Into the Badlands .

Сезон 2

Download: http://cabuaan.com/serie/Flip-Flappers - Flip Flappers .

Adobe Photoshop CS6 13.0.1 Final Multilanguage (cracked dll)

Download: http://cookingyoursassoff.com/serie.php?serie=4395 - Supermax .

0 Comments

Download: http://daqian999.com/brooklyn-nine-nine-saison-5-french-hdtv.html - Brooklyn Nine-Nine Saison 5 FRENCH HDTV .

Kindle Books Collection

Download: http://krbzbwimbokrp.com/serie/Undateable - Undateable .

[email protected -

Download: http://infobawel.com/serie/serie/Die-Simpsons/1/6-Lisa-blaest-Truebsal/OpenLoad - OpenLoad .

Skylanders Academy S01E04 720p x264 [StB - Others

Download: http://cgunitedsc.com/serie/Haus-des-Geldes - Haus des Geldes .

Cuando Un Hombre Pierde Sus Ilusiones

Download: http://xn--amzon-hra.com/the-box-2007-watch-online/ - The Box (2007) .

High Strung 2016 LiMiTED BRRip XviD AC3-iFT[PRiME -

Download: http://cabuaan.com/serie/Lipstick-Jungle - Lipstick Jungle .

Forza Horizon 3 (2016) (Final Version) (for pc)

Download: http://infobawel.com/vorgeschlagene-serien/vote:9768 - Epitafios - Tod ist die Antwort .

OnlineTV v.12.16.6.14 Full Crack 2016 Channel US FR TUK IT ESP AR Decrypt 800 channel HD multi lang instal - 2016-11-17

Download: http://jumpstart-pros.com/serie/Die-Dinos - Die Dinos .

Bryce Dallas Howard

Download: http://shsh-1.com/all/forza-horizon-3-2016-pc-multi-t3389214.html - Forza Horizon 3 (2016) (PC) (Multi) .

Alasdair Fraser & Natalie Haas - In The Moment

Download: http://jumpstart-pros.com/serie/CopStories - CopStories .

Agre G Isso E Kuduro 2010

Download: http://manishakumari.com/serie/Sacred-Seven - Sacred Seven .

Windows 7 8 1 10 X64 18in1 UEFI en-US Nov 2016 Generation2}

Download: http://sewerrepairpro.com/mov/family/ - Семейные .

Alan Jackson - Gone Country - 3gp

Download: http://tl-hardware.com/vorgeschlagene-serien/vote:13070 - Fast N’ Loud .

von_Gilgamesh

Download: http://cgunitedsc.com/serie/Kara-Ben-Nemsi-Effendi - Kara Ben Nemsi Effendi .

Young Rider - November - December 2016

Download: http://jumpstart-pros.com/serie/Juuou-Mujin-no-Fafnir - Juuou Mujin no Fafnir .

Nine to Five 2013 REMASTERED 1980 BDRip x264-VoMiT[1337x - [SN -

Download: http://cabuaan.com/vorgeschlagene-serien/vote:13371 - Die Geiseln .

Aleksandr Pushkin - Sbornik Proizvedenii

Download: http://cookingyoursassoff.com/serie.php?serie=3769 - The Dawns Here Are Quiet .

Mac OS X Mountain Lion 10.8.5 12F37 (Intel)

Download: http://cookingyoursassoff.com/serie.php?serie=4390 - Grupo 2: Homicidios .

The Big Bang Theory S10E06 HDTV x264 LOL[ettv -

Download: http://cabuaan.com/serie/serie/The-Walking-Dead/1/4-Vatos/OpenLoad - OpenLoad .

Expeditions Viking [2016 MAC OS GAME -

Download: http://krbzbwimbokrp.com/serie/Grandfathered - Grandfathered .

Force 2 (2016) Full Movie DVDrip HD Free Download

Download: http://duendeimagery.com/serie/Catweazle - Catweazle .

VA - The Jazz House Independent 2nd Issue (1998) [FLAC - [Isohunt.to -

Download: http://cookingyoursassoff.com/serie.php?serie=1774 - Naomi y Wynonna .

Read More

Download: http://krbzbwimbokrp.com/serie/Spiel-des-Lebens - Spiel des Lebens .

SMD03 - Saki Ootsuka (S Model Vol 3) XXX uncen WebGift

Download: http://cookingyoursassoff.com/serie.php?serie=3195 - The Ranch .

Standoff

Download: http://merchantscirclellc.com/ver/gurazeni-1x03.html - 1x03 Gurazeni .

On The Lot

Download: http://krbzbwimbokrp.com/serie/Gekkan-Shoujo-Nozaki-kun - Gekkan Shoujo Nozaki-kun .

Doctor Strange (2016) Score YG [Isohunt.to -

Download: http://krbzbwimbokrp.com/serie/Matantei-Loki-Ragnarok - Matantei Loki Ragnarok .

Chance S01E06 720p WEB H264-DEFLATE[ettv -

Download: http://cabuaan.com/serie/serie/The-Walking-Dead/1/3-Tag-der-Froesche/Streamango - Streamango .

PlumperPass 16 11 16 Kendra Kox Double The Pleasure XXX SD MP4-RARBG

Download: http://cgunitedsc.com/serie/Ballroom-e-Youkoso - Ballroom e Youkoso .

Alan Price - Time & Tide

Download: http://manishakumari.com/serie/serie/How-I-Met-Your-Mother/1/13-Traum-und-Wirklichkeit/OpenLoadHD - OpenLoadHD .

Impastor S02E08 iNTERNAL HDTV x264-ALTEREGO[PRiME -

Download: http://cabuaan.com/serie/Disney-S3-Stark-schnell-schlau - Disney S3 – Stark, schnell, schlau .

Faketaxi

Download: http://cabuaan.com/serie/JK-Meshi - JK Meshi! .

adult movies

Download: http://lightmantherightman.com/search/Lucia Ocone.html - Lucia Ocone .

angry birds

Download: http://flasheur.fr/movie/addiction-voyeurism-19325/ - Addiction Voyeurism .

EASEUS Partition Master 11 9 All Edition rar

Download: http://cgunitedsc.com/serie/Grimmsberg - Grimmsberg .

MasterChef (UK) The Professionals S09E05

Download: http://infobawel.com/serie/Happiness - Happiness! .

Doom Metal

Download: http://manishakumari.com/serie/Fate-Extra-Last-Encore - Fate/Extra Last Encore .

CD Marcos & Belutti – Acustico ( Ao Vivo 2014 )

Download: http://krbzbwimbokrp.com/serie/Ben-10-2016 - Ben 10 (2016) .

Cadence Lux - Babysitters Taking On Black Cock 4 rq mp4

Download: http://jumpstart-pros.com/serie/Puella-Magi-Madoka-Magica - Puella Magi Madoka Magica .

American Truck Simulator PC GAME [2016 - REPACK

Download: http://jumpstart-pros.com/serie/Saki-Achiga-hen-Episode-of-Side-A - Saki: Achiga-hen - Episode of Side-A .

2000 Ans D'Histoire - Janvier 2011(A)

Download: http://duendeimagery.com/serie/Hand-of-God - Hand of God .

CyberGhost_6 0 3 2124 + Premium serial

Download: http://cabuaan.com/vorgeschlagene-serien/vote:12360 - The Devils Ride - Ein Leben auf zwei Rädern .

Structural Steel Design: LRFD Approach, 2nd edition

Download: http://vettriselvan.com/serie.php?serie=2442 - Complications .

Blood for Dracula 1974 HD ????? ????? 6.2

Download: http://infobawel.com/serie/The-Man-in-the-High-Castle - The Man in the High Castle .

Handheld

Download: http://cookingyoursassoff.com/serie.php?serie=3956 - The Quad .

z nation

Download: http://cookingyoursassoff.com/serie.php?serie=4709 - A.I.C.O. Incarnation .

Stone Sour Do Me A Favor Official Video

Download: http://vettriselvan.com/serie.php?serie=4421 - SEAL Team .

American Horror Story Cast – Criminal (from American Horror Story) [fe...

Download: http://jumpstart-pros.com/serie/Gunpowder - Gunpowder .

The Flash S1E21 'Grodd Lives' Trailer

Download: http://supermarketdm.com/watch/RGbWAYdY-paper-year.html - Paper Year .

Arrow S05E07 720p HDTV X264-DIMENSION

Download: http://cookingyoursassoff.com/serie.php?serie=3928 - Desmontando la Historia .

American Dad S13E02 XviD-AFG TV Shows

Download: http://cgunitedsc.com/serie/Innocent-Venus - Innocent Venus .

TheUpperFloor 16 11 15 Karlee Grey And Penny Pax XXX 720p MP4-KTR[rarbg -

Download: http://krbzbwimbokrp.com/serie/Hercules - Hercules .

UltimateZip 9 0 1 51 + Keygen Keys [4realtorrentz - zip

Download: http://puremusicstudio.com/torrent/12622171/football-manager-2018-mac-os-game-full.html - Football Manager 2018 MAC OS GAME (full) 2017 .

BitLord.com

Download: http://cpp-good.com/films/acteur/mark-wahlberg.html - Mark Wahlberg .

Ben and Lauren: Happily Ever After Season 1 Episode 5 - Happily Ever After 1×05

Download: http://flowerdeliveriesnow.com/watch-online-healthy-hour-episode-22-104152.html - Healthy Hour - еЃҐеє·ж—ҐиЁ - Episode 22 .

BitLord.com

Download: http://vicieux.fr/Fran-ois-Marsal-Encyclop-die-de-banque-et-de-bourse_3642961.html - François-Marsal - Encyclopédie de banque et de bourse .

Aleksandr Miraj Raznii Sudiby 2008

Download: http://trn1023.com/movie/wheres-my-baby-25430.html - HD Where's My Baby? .

@file gomorra

Download: http://testdomainreg-08244.com/film/The-Suicide-Shop_hZk - The Suicide Shop .

Alabama - Just Us - 1987 - 192 CBR

Download: http://doigiaycu.com/watch-movie-national-security-online-megashare-2-2012 - National Security (2003) .

SexArt - Perfect Awakening - Dolly Diore [720p - mp4

Download: http://chaobo99.com/descargar-torrents-variados-1949-Rammstein-In-Amerika-Concert-In-Madison-Square-Garden.html - Rammstein - In Amerika (Concert In Madison Square Garden). .

Sonic The Hedgehog Classics Android

Download: http://doigiaycu.com/watch-movie-escape-plan-2-hades-online-megashare-9332-430843-estream - Watch on estream .

(2016) TS Storks

Download: http://pc-tools.fr/mp3/codomo-dragon.html - CODOMO DRAGON .

vlc 2 2 5-20160711-0754 beta x64

Download: http://dogux.com/02190817-aftenposten-junior-15-mai-2018/ - Aftenposten Junior – 15 mai 2018 .

Norman Malware Cleaner v2.07.06 DC 24.05.2013 ( Ryushare - Uploaded )

Download: http://mapevaders.com/mov/animation/?lc=ru - Р СѓСЃСЃРєРёР№ .

Star Trek: Renegades 2015 HD ????? ????? 4.9

Download: http://mortalarcade.com/chapter/tomochan_wa_onnanoko/chapter_146 - Chapter 146 .

TeasePOV 16 11 04 Candice Dare XXX 720p MP4 KTR

Download: http://litvibe.com/manga/one-piece/649 - One Piece 649 .

Hell or High Water Torrent download

Download: http://bddinkal.com/mp3/a-chord-hsieh.html - A CHORD HSIEH .

http://extraimago.com/image/jCp

Download: http://charterorangebeach.com/song/anji-luwuk-lebih-indah.html - ANJI - Luwuk Lebih Indah .

Blood Punch

Download: http://ottqrxivzzol.com/sportovni.php - SportovnГ .

San Andreas

Download: http://siennaryan.com/search/?search=powerless%20s01e09%20720p - powerless s01e09 720p .

The.Big.Bang.Theory.S10E06.Web-DL.1080p.10bit.5.1.x265.HEVC-Qman[UTR - .mkv

Download: http://athuexere.com/show/exo-first-box/ - EXO First Box .

view all

Download: http://zaeinab.com/series-hd/ - Series HD .

History Of War Issue 3 - May 2014 UK

Download: http://athuexere.com/show/running-man/episode-58.html - Running Man Episode 58 .

0 Comments

Download: http://exprisoner.com/tag/jeremy-kagan - Jeremy Kagan .

The Robert Cray Band ( 2014 ) - In My Soul ( Limited Edition )

Download: http://socriptomoedas.com/watch-online/a-frosty-affair - A Frosty Affair .

Masterminds

Download: http://xb1123.com/physics-workbook-for-dummies-e20627309.html - Physics Workbook For Dummies .

Releases

Download: http://cpp-good.com/films/acteur/sebastien-thiery.html - SГ©bastien Thiery .

APES.REVOLUTION.Il.pianeta.delle.scimmie.Movie.ITALIA.2014.BluRay-1080p.x264-YIFY

Download: http://hsq333.com/movie/high-jack-2018/ - High Jack (2018) Hindi Full Movie Watch Online Watch High JackВ (2018) Full Movie Online, High Jack (2018) Full Movie Free Download,В High Jack Hd Movie Watch Online :В Rakesh, an out of luck DJ, has just found out that the gig he was to perform in Goa, has been canceled. He is in urgent requirement of money to save his Dad's clinic and in that desperation, partly agrees to carry ... .

defonce de beaux petits culs 5 s1 mp4

Download: http://dns9227.com/film/fichefilm_gen_cfilm=14790.html - Mon voisin Totoro .

Kate Micucci

Download: http://250585g.com/videos/replay/864066/days-gone-un-monde-transforme-paysages-comme-habitants-e3-2018.htm - ReplayDays Gone : Un monde transformГ©, paysages comme habitants - E3 2018 .

The Makers of Scotland: Picts, Romans, Gaels and Vikings

Download: http://margatebookkeeping.com/chapter/tomochan_wa_onnanoko/chapter_345 - Chapter 345 .

Lethal Weapon S01E07 HDTV x264-LOL[ettv -

Download: http://nightowlstore.com/rubrique/31/livre/957/ma-pochette-de-danseuse - Ma pochette de danseuse .

Free Full Fantasy TV Shows

Download: http://travel-fleet.com/search/Jim Gaffigan.html - Jim Gaffigan .

K milked by Daniela 08 mp4

Download: http://train4entrepreneur.com/f/581604/nike_tracer_epl_by_darfidos_and_peyman.html - NIKE TRACER EPL by darfidos and Peyman .

Dark Souls II Scholar of The First Sin REPACK-KaOs

Download: http://mtvirtualit.com/tags/explore/plot/103578/0/0 - Escapades1332 .

[???? - ???? 1? 161117

Download: http://carsforusvets.com/DAD1FC9A9ED1E54548962F711FBD7395CCBB52A1/WWE-2K18-CODEX - WWE 2K18 CODEX .

Dragon Ball Super Arc Champa Part1 [VOSTFR - [720P - [...(N) (P)

Download: http://woolfestreet.com/showthread.php?264469-Veere-Di-Wedding-2018-Watch-Full-Hindi-Movie-Online-Pre-Dvd-Rip&s=91001e82fe7921da8ed66ddfcd523e59 - Veere Di Wedding | 2018 Watch Full Hindi Movie Online - Pre Dvd Rip .

[AnimeRG - FLIP FLAPPERS - 7 [720p - [ReleaseBitch -

Download: http://vicieux.fr/business.html - Business .

100 Uncensored Japanese HD Cumshots - part 2 HD 720p

Download: [url=http://sharpsushi.com/index.php?do=static&page=come-postare-un-articolo]Come creare un articolo[/url] .

Rachel Allen's: Easy Meals - Series 1

Download: [url=http://chaobo99.com/serie-episodio-descargar-torrent-52752-Rusia-Con-Simon-Reeve-1x01-al-1x03.html]Rusia Con Simon Reeve - 1ВЄ Temporada [1080p][/url] .

My Friends

Download: [url=http://woolfestreet.com/forumdisplay.php?1747-Satrangee-Sasural&s=f970a21e442858c28cb47b5bb7909a32]Satrangee Sasural[/url] .

Suicide Squad 2016 EXTENDED 1080p WEB-DL x264 AC3-JYK[SN]

Download: [url=http://gomeseventos.com/rage-french-dvdrip-2018.html]Rage FRENCH DVDRIP 2018[/url] .

Alesana - Confessions (2015)

Download: [url=http://justpositives.com/watch-everything-everything-online-free-putlocker-849.html]Everything, Everything (2017)[/url] .

Telerik Platform Ultimate Collection for .NET 2016.2 SP1 [OS4World]

Download: [url=http://charterorangebeach.com/song/james-arthur-at-my-weakest-lyric.html]James Arthur - At My Weakest (Lyric Video)[/url] .

Suicide Squad 2016 EXTENDED 1080p WEB-DL x264 AC3-JYK

Download: [url=http://pc-tools.fr/mp3/david-bowie.html]David Bowie[/url] .

Naruto Shippuden Completo MP4 Torrent

Download: [url=http://ottqrxivzzol.com/tema/v%C3%BDslech]vГЅslech[/url] .

Download Dej loaf and lil wayne for free

Download: [url=http://bddinkal.com/mp3/juno-mak--kay-tse.html]Juno Mak Kay Tse[/url] .

ReCore - pc [2016]REPACK

Download: [url=http://vitalpediafox.com/watch/835430-3-gol-terbaik-timnas-indonesia-u-22-pada-sea-games-2017]3 Gol Terbaik Timnas Indonesia U-22 pada SEA Games 2017[/url] .

Ghost Hunters

Download: [url=http://woolfestreet.com/forumdisplay.php?2439-Zun-Mureed&s=91001e82fe7921da8ed66ddfcd523e59]Zun Mureed[/url] .

Alvin and the Chipmunks The Road Chip (2015) CAM HD Free Download

Download: [url=http://xb1123.com/data-analysis-with-microsoft-excel-e25241812.html]Data Analysis with Microsoft Excel[/url] .

AmericanDaydreams.Ava.Addams.mp4s

Download: [url=http://charlottebodyrubs.com/series/marlon-saison-1-episode-10-15672.html]Marlon - S01E10[/url] .

Petes Dragon 2016 BDRip x264 AC3-iFT[PRiME]

Download: [url=http://monia.fr/watch86/Greys-Anatomy/season-14-episode-15-Old-Scars-Future-Hearts]Old Scars, Fut season 14 episode 15[/url] .

[email protected] @(MIDD-372)(MOODYZ) ?????? Beautiful female teacher ?????

Download: [url=http://athuexere.com/show/weekly-idol/episode-246.html]Weekly Idol Episode 246[/url] .

Adobe After Effect

Download: [url=http://monia.fr/watch69/The-Big-Bang-Theory-/season-06-episode-06-The-Extract-Obliteration]The Extract Ob season 06 episode 06[/url] .

???

Download: [url=http://litvibe.com/manga/hunter-x-hunter/101]Hunter X Hunter 101[/url] .

No Tomorrow S01E06 720p HDTV x264-FLEET[PRiME]

Download: [url=http://tapisdorient.fr/2015/02/]February 2015[/url] .

Lethal Weapon S01E03 HDTV x264-LOL[ettv]

Download: [url=http://mbc787.com/tema-bugatti-veyron/]Tema de Bugatti Veyron[/url] .

Alanis Morissette - Live At Montreux

Download: [url=http://zealoustools.com/voir-manga-choujigen-game-neptune-the-animation-en-streaming-6769.html]Choujigen Game Neptune The Animation[/url] .

Sonic Academy - KICK 2 v1.0.5 macOS [R2R][dada] [Isohunt.to]

Download: [url=http://cpp-good.com/ebooks/partition-musicale/]Partition musicale[/url] .

Alejandro Sanz - Paraiso Express - Teatro Compac Gran Via - Madr

Download: [url=http://tapisdorient.fr/2016/01/]January 2016[/url] .

Download Now The Big Bang Theory S10E09 HDTV x264 LOL 1 hour ago

Download: [url=http://selftrainedprogrammer.com/film?genre=Science Fiction]Science Fiction[/url] .

Addicted to

Download: [url=http://muzica-etno.com/movie-online/vikings-season-4]Vikings - Season 4[/url] .

Naruto Shippuden – Episodio 476 e 477 Legendado HD Online

Download: [url=http://vandyhacks.com/online-sorozatok/6560/seasons/1/episodes/122]ГЃlmodj velem 1.Г©vad 122.epizГіd[/url] .

Leave a Comment!

Download: [url=http://landingcraftsforsale.com/2018/04/29/%d9%85%d8%b4%d8%a7%d9%87%d8%af%d8%a9-%d9%81%d9%8a%d9%84%d9%85-%d8%a7%d9%84%d8%a7%d8%b5%d9%84%d9%8a%d9%8a%d9%86-2017-%d8%a7%d9%88%d9%86-%d9%84%d8%a7%d9%8a%d9%86-%d9%83%d8%a7%d9%85%d9%84/]Ш§ЩЃЩ„Ш§Щ… Ш№Ш±ШЁЩ‰ 59 Щ…ШґШ§Щ‡ШЇШ© ЩЃЩЉЩ„Щ… Ш§Щ„Ш§ШµЩ„ЩЉЩЉЩ† 2017 Ш§Щ€Щ† Щ„Ш§ЩЉЩ† ЩѓШ§Щ…Щ„ Щ…ШґШ§Щ‡ШЇШ© ЩЃЩЉЩ„Щ… Ш§Щ„Ш§ШµЩ„ЩЉЩЉЩ† 2017 Ш§Щ€Щ† Щ„Ш§ЩЉЩ† ЩѓШ§Щ…Щ„ ШЁШ¬Щ€ШЇШ© Ш№Ш§Щ„ЩЉШ© HD Щ…Ш№ Ш§Щ…ЩѓШ§Щ†ЩЉШ© ШЄШЩ…ЩЉЩ„ ЩЃЩЉЩ„Щ… ШЁШґЩѓЩ„ Щ…ШЁШ§ШґШ± Щ€ШіШ±ЩЉШ№ШЊ Щ€ШЄЩ‚Щ€Щ… Щ‚ШµШ© ЩЃЩЉЩ„Щ…[/url] .

Alesana A Place Where The Sun Is Silent Deluxe Edition 2011 320kbps CDRip MESS Reupload

Download: [url=http://monia.fr/watch738/Young-and-Hungry/season-05-episode-10-Young-and-Amnesia]Young & Amnesi season 05 episode 10[/url] .

Kerish Doctor 2016 [Isohunt.to]

Download: [url=http://mtvirtualit.com/tags/explore/plot/103766/0/0]Filmmaking58[/url] .

Isabella Madison

Download: [url=http://chaobo99.com/noticia-torrents-13124.html]MUSICALES.[/url] .

Being.Human.S05E04.PDTV.x264-Deadpool

Download: [url=http://hayvan.biz/billionaires/11495/11495.html]Mr. Lucky: Billionaire Romance Novella[/url] .

12 Маймуни Сезон 2 Епизод 4 / 12 Monkeys БГ СУБТИТРИ

Download: [url=http://palmettokennelssc.com/xem-phim-kakuriyo-no-yadomeshi-2018-14710.html](Tбєp 13/??)Kakuriyo No YadomeshiгЃ‹гЃЏг‚Љг‚€гЃ®е®їйЈЇ[/url] .

Rachmaninov plays his 2nd and 3rd Piano Concertos

Download: [url=http://flowlyrics.com/download_firefox/]Download[/url] .

Сезон 1 БГ СУБТИТРИ

Download: [url=http://otkwjneiewqdt.com/juegos-pc/?category_name=conduccion]juegos de conduccion para la PC[/url] .

Ab Mujhe Raat Din Sonu Nigam Karaoke

Download: [url=http://scottheadfishing.com/index.php?PHPSESSID=gqgcssbh7edv0bhknlmdbqm5e6&topic=131568.msg365651;topicseen]Re: SANJU.(2018).1-3.Desi.Pre.Rip.x264.AC3-DTOne.Exclusive[/url] .

Harry Potter e o Prisioneiro de Azkaban – Dublado Full HD 1080p Online

Download: [url=http://flowerdeliveriesnow.com/korean-drama/3646-let-s-hold-hands-tightly-and-watch-the-sunset/]Let’s Hold Hands Tightly and Watch The Sunset - м†ђ кј мћЎкі , м§ЂлЉ” м„ќм–‘мќ„ л°”лќјліґмћђLet’s Hold Hands Tightly and Watch The Sunset[/url] .

Dreams 1990 1080p WEB-DL Ita Jap x265-NAHOM

Download: [url=http://monia.fr/watch86/Greys-Anatomy/season-11-episode-24-Youre-My-Home]You're My Home season 11 episode 24[/url] .

bridget jones

Download: [url=http://dating-spot-is-here.com/games/pc/6741/The Sims 4 Seasons]The Sims 4 Seasons REPACK - FitGirl - 17.9 GB[/url] .

Magnet 1 8 for MAC-OSx

Download: [url=http://muzica-etno.com/movie-online/mia-a-greater-evil]M.I.A. A Greater Evil[/url] .

Addicted to Fresno 2015 iNTERNAL BDRip x264-LiBRARiANS[PRiME]

Download: [url=http://www.sallemariage.fr/milver/heute-bin-ich-blond-2013-video_d1203ae21.html]Heute bin ich blond (2013)[/url] .

Lethal Weapon

Download: [url=http://litvibe.com/manga/boku-no-hero-academia/165]Boku no Hero Academia 165[/url] .

Anthony C. Ferrante,

Download: [url=http://brletras.com/show/running-man/episode-189.html]Running Man Episode 189[/url] .

Lethal Weapon S01E07 HDTV x264-LOL[ettv]

Download: [url=http://puremusicstudio.com/advertising.html]Advertising[/url] .

One Tree Hill

Download: [url=http://pc-tools.fr/mp3/come-as-you-are.html]Come as You Are[/url] .

martin Mystere 18 e 19

Download: [url=http://www.mss118.com/milver/pod-prikritie-s04e03-video_044696fab.html]Pod prikritie S04E03 Pod prikritie S04E03[/url] .

Ava Addams Naughty Neighbors[Brazzers] HD 720P

Download: [url=http://ivalentinequotes.com/2018/02/shaadi-mein-zaroor-aana-2017-hindi-movie-180mb-hevc-hdtvrip/]Shaadi Mein Zaroor Aana 2017 Hindi Movie 180Mb hevc HDTVRip[/url] .

brie learns to obey red phoenix pdf

Download: [url=http://sejourseychelles.fr/punjab-nahi-jaungi-2017-hdtv-400mb-pakistani-urdu-480p/]Leave a Comment[/url] .

No Tomorrow S01E06 WEB-DL XviD-FUM[ettv]

Download: [url=http://bddinkal.com/mp3/as-if-it's-your-last-(마지막мІлџј).html]AS IF IT'S YOUR LAST (마지막мІлџј)[/url] .

Facebook

Download: [url=http://vicieux.fr/Fr-d-ric-Masson-Napol-on-et-l-amour_3620485.html]FrГ©dГ©ric Masson - NapolГ©on et l'amour[/url] .

Baka Go Home Cover

Download: [url=http://shreyasdhuliya.com/download/60-hertz-project-impact-soul-original-mix/]60 Hertz Project Impact Soul Original Mix[/url] .

[??/27.0GB] ????? 10??.10.Cloverfield.Lane.2016.1080p.BluRay.REMUX.AVC.DTS-HD.MA.TrueHD.7.1.Atmos-RARBG

Download: [url=http://tavada-tavuk.com/panopreter/descargar]Descargar[/url] .

??? ???

Download: [url=http://brletras.com/show/welcome-first-time-in-korea-season-2/]Welcome First Time In Korea Season 2[/url] .

Capuccetto.rosso.sangue.2011.iTALiA.MD.720p.BDRip.XviD-TrTd_CREW

Download: [url=http://shreyasdhuliya.com/download/steve-harley-cockney-rebel-sebastian/]Steve Harley Cockney Rebel Sebastian[/url] .

Recommended

Download: [url=http://vicieux.fr/Building-High-Performance-Agile-Teams_3381518.html]Building High Performance Agile Teams[/url] .

Love Everlasting 2016 HDRip AC3 2 0 x264-BDP[SN]

Download: [url=http://lifeinsurancetampa.com/series/dc-s-legends-of-tomorrow-their-time-is-now/]Watch movie[/url] .

Alan Watts 11 Good Seeded Torrents

Download: [url=http://ottqrxivzzol.com/tema/spisovatel]spisovatel[/url] .

Progressive / Power Metal

Download: [url=http://xb1123.com/english-grammar-composition-e18719339.html]English Grammar Composition[/url] .

BitLord.com

Download: [url=http://margatebookkeeping.com/chapter/tomochan_wa_onnanoko/chapter_253]Chapter 253[/url] .

[????] JTBC ??? 161117

Download: [url=http://ihealthlite.com/web_chargify/]Chargify[/url] .

Bruno Mars - Grenade

Download: [url=http://litvibe.com/manga/one-piece/610]One Piece 610[/url] .

emma green

Download: [url=http://www.bilgecocuk.biz/assassins-creed-en-streaming8/]Regarder un film[/url] .

BitLord.com

Download: [url=http://onsco.com/genres/thriller/page-2]Next в†’[/url] .

the goldbergs s04e06 720p

Download: [url=http://locationriadmaroc.fr/cliffhanger-1993-watch-online-movie-free/]Cliffhanger (1993)[/url] .

16/06/18

Download: [url=http://dating-spot-is-here.com/popular]ШґШ§Ш¦Ш№[/url] .

Life on Mars

Download: [url=http://kingsbridgedental-spanish.com/read/dl.php?p=The Lost Art of Being: Secrets to a Calm, Happy, Easy Life][Fast Download] The Lost Art of Being: Secrets to a Calm, Happy, Easy Life[/url] .

CAPTAIN AMERICA The First Avenger 2011 720p BluRay DTS x264-HiDt

Download: [url=http://nightowlstore.com/rubrique/291/linux-et-logiciels-libres]Linux et logiciels libres[/url] .

Falcon Rising 2014 HD ????? ????? 5.8

Download: [url=http://hg5500.com/film/maze-runner-the-death-cure-15927]CAM Maze Runner: The Death Cure (2018)[/url] .

Facebook

Download: [url=http://fortydiet.com/incoming-2018-bluray-1080p-wukns.html]Incoming (2018) [BluRay] (1080p) 17 РјРёРЅСѓС‚[/url] .

The Flash 2014 S03E06 HDTV x264-LOL[ettv] Torrents

Download: [url=http://steanncammunlty.com/movie/american-pie-complete-movie-series-watch-online-free-download/]American Pie Complete Movie Series Watch Online & Free Download Watch American Pie Complete Movie Series full movie online, Free Download American Pie Movie SeriesВ full Movie, American Pie Movie Series full movie download in HD, American Pie Movie Series Full Movie Online Watch Free Download HD : American Pie is a series of sex comedy films. The first film in the series was released on July 9, 1999, by Universal ...[/url] .

Dj Jaan Tomake Chai Ami Aro Kache J Han Club Mix 2011 Mp3

Download: [url=http://charterorangebeach.com/song/twice-i-want-you-back.html]TWICEгЂЊI WANT YOU BACKгЂЌMusic Video[/url] .

Advanced Search

Download: [url=http://bddinkal.com/mp3/galaxy-star.html]galaxy star,[/url] .

Microsoft Office Professional Plus 2013 SP1 November 2016 (x86x64) [Androgalaxy]

Download: [url=http://misterporn.fr/t3-3082229/Calibre-2018-720p-NF-WEB-DL-800MB-MkvCage-torrent.html]Calibre (2018) 720p NF WEB-DL 800MB - MkvCage[/url] .

[AnimeRG] Nanbaka - 07 [1080p] [Multi-Sub] [x265] [pseudo] mkv

Download: [url=http://athuexere.com/show/oppa-thinking/]Oppa Thinking[/url] .

Geekatplay Studio: Distant Trip

Download: [url=http://rangersproteamshop.com/18-kiss-kill-2017/]kiss and kill hindi dubbed full movie[/url] .

Acrowsoft DVD Ripper 1.3.0.4

Download: [url=http://dns9227.com/video/player_gen_cmedia=19441802&cfilm=21189.html]Voir la bande-annonce[/url] .

Renoir 2016 PC Cracked License

Download: [url=http://pc-tools.fr/mp3/no-mГЎs.html]no mГЎs,[/url] .

Read More

Download: [url=http://dataksa.com/forums/kitty-kats-top-models-pictures-videos.54/threads/dina-sets-1-5-2018-06-09.2577194/]Dina Sets 1-5 | 2018-06-09[/url] .

Alien Nation

Download: [url=http://youshunjieyi.com/mp3/3515699441/nelle-tue-mani.html]Nelle Tue Mani[/url] .

vlc 2 2 5-20160711-0754 beta x64

Download: [url=http://locationriadmaroc.fr/category/the-girlfriend-experience/]The Girlfriend Experience[/url] .

Zero Days 2016 READNFO 720p HDTV x264-BATV[ettv]

Download: [url=http://athuexere.com/show/my-bodyguard/]My Bodyguard[/url] .

Perl/PHP/Python

Download: [url=http://mtvirtualit.com/tags/explore/mood/102275/0/0]Sexual222[/url] .

Before I Wake Official Trailer

Download: [url=http://charterorangebeach.com/song/kahitna-rahasia-cintaku-baper--lyric.html]KAHITNA - Rahasia Cintaku #Baper [Official Lyric Video][/url] .

Dead Drop 2013 avi

Download: [url=http://exprisoner.com/18675-watch-the-lego-ninjago-movie-2017-1080p-bluray-x264-geckos-mkv.html]the.lego.ninjago.movie.2017.1080p.bluray.x264-geckos.mkv[/url] .

100% Real Swingers

Download: [url=http://fbdiffusion.fr//fbdiffusion.fr/serie/haus-des-geldes/]Haus des GeldesInfos zur Serie[/url] .

Syncovery v7 63a Build 424 (x86 x64) - Full rar

Download: [url=http://wbchsnews.com/tag/telecharger-disco-elysium-gratuit-pc/]telecharger Disco Elysium gratuit pc[/url] .

star wars rebels

Download: [url=http://ultra-turbomuscle.com/ver/the-lord-of-the-skies-6x38.html]6x38 El seГ±or de los cielos[/url] .

tender savage by phoebe conn

Download: [url=http://zippohcm.com/movie/vhs2/]HD V/H/S/2[/url] .

Сезон 2

Download: [url=http://athuexere.com/show/running-man/episode-36.html]Running Man Episode 36[/url] .

BitLord.com

Download: [url=http://zealoustools.com/voir-manga-tchoupi-et-doudou-en-streaming-1727.html]Tchoupi et Doudou[/url] .

Cyndi Lauper - Hat Full Of Stars (1993)

Download: [url=http://zealoustools.com/voir-manga-mononoke-en-streaming-1480.html]Mononoke[/url] .

Tushy 16 11 17 Karla Kush And Arya Fae XXX 1080p MP4 KTR[rarbg]

Download: [url=http://nvsamour4u.com/film/4k-ultra-hd-2160p/giochi/giochi-wii/]Giochi WII[/url] .

A Christmas Star (2015) 300mb Movie DVDRip

Download: [url=http://monia.fr/watch69/The-Big-Bang-Theory-/season-02-episode-14-The-Financial-Permeability]The Financial season 02 episode 14[/url] .

Absolute Genius Monster Builds S01E05 mp4

Download: [url=http://vicieux.fr/Pass4Sure-New-CCNA-640-802-Latest-Dumps_137397.html]Pass4Sure New CCNA 640-802, Latest Dumps[/url] .

The Flash 2014 S03E06 Shade 720p WEB-DL DD5 1 H264-RARBG-kovalski mkv

Download: [url=http://typemogul.com/daybreak/]Watch Movie[/url] .

I— I I?I? I©I I±I?I± I?I„I·I? I•I»I»I¬I?I±

Download: [url=http://woolfestreet.com/forumdisplay.php?950-Talk-Shows&s=91001e82fe7921da8ed66ddfcd523e59]Talk Shows[/url] .

PlumperPass 16 11 16 Kendra Kox Double The Pleasure XXX 1080p MP4-KTR[rarbg]

Download: [url=http://jardinpascher.fr/d/dphn-176-mega.html]dphn 176[/url] .

MEYD-202 mp4

Download: [url=http://flowerdeliveriesnow.com/chinese-drama/3918-fire-of-eternal-love-cantonese/]Fire of Eternal Love (Cantonese) - 烈火如жЊFire of Eternal Love (Cantonese)[/url] .

Read More

Download: [url=http://doktermana.com/deus-da-guerra-2018-hd-bluray-720p-e-1080p-dublado-dual-audio/]Deus da Guerra (2018) – HD BluRay 720p e 1080p Dublado / Dual Áudio[/url] .

Angels And

Download: [url=http://brletras.com/show/knowing-brother/episode-121.html]Knowing Brother Episode 121[/url] .

public disgrace

Download: [url=http://www.mss118.com/milver/the-vampire-diaries-s04e07-video_51a747d90.html]The Vampire Diaries S04E07[/url] .

Jay Hernandez

Download: [url=http://dating-spot-is-here.com/games/pc]Ш§Щ„Ш№Ш§ШЁ PC[/url] .

100 000 Анекдотов.pdf

Download: [url=http://solitabre.com/?p=films&search=Amanda Joy]Amanda Joy[/url] .

view all

Download: [url=http://shuziyouhua.com/dirt-showdown-telecharger-gratuit-de-pc-et-torrent]DiRT Showdown telecharger gratuit de PC et Torrent[/url] .

Hope Howell - Babysitters Taking On Black Cock 4 rq.mp4

Download: [url=http://zealoustools.com/voir-manga-oruchuban-ebichu-en-streaming-823.html]Oruchuban Ebichu[/url] .

WWE NXT 2016 11 16 720p WEB h264-HEEL [TJET]

Download: [url=http://nightowlstore.com/rubrique/137/politique]Politique[/url] .

Alc Strumentals - Alchemist - Fav Trkz 2

Download: [url=http://executivegroupes.com/preacher-3a-temporada-2018-hd-720p-e-1080p-dublado-legendado/]Preacher 3ª Temporada (2018) – HD 720p e 1080p Dublado / Legendado[/url] .

beatrice

Download: [url=http://jodietstest7141imbriglio.com/watch/the-things-weve-seen-2017.html]The Things We've Seen Duration: 80 min[/url] .

Most Downloaded

Download: [url=http://clevelandwebsitehost.com/transporter-3-2008.html]Voir l'article[/url] .

big brother

Download: [url=http://athuexere.com/show/running-man/episode-196.html]Running Man Episode 196[/url] .

E-40 - The D-Boy Diary Book 1 -2016-

Download: [url=http://testdomainreg-08244.com/film/Ali-s-Wedding_hTm]Ali's Wedding[/url] .

Felipa Lins - Ready To Bang (February 10th, 2016) (TrannySurprise)

Download: [url=http://mortalarcade.com/chapter/tales_of_demons_and_gods/chapter_125.5]Chapter 125.5[/url] .

TapinRadio Pro 2.02 (x86/x64) Multilingual Portable

Download: [url=http://netprawnicy.pl/bleeding-steel-2018-english-movie-720p-bluray-1gb-350mb-download/]Bleeding Steel (2018) English Movie 720p BluRay 1GB 350MB Download[/url] .

Chat IRC SSL

Download: [url=http://nvsamour4u.com/2018/05/24/]24-05-2018, 21:31[/url] .

Download Supernatural 12? Temporada Legendado Torrent

Download: [url=http://chaobo99.com/descargar-torrents-variados-1399-Android-para-Windows-Emulador.html]Android para Windows Emulador.[/url] .

doctor who

Download: [url=http://netprawnicy.pl/intelligent-police-2018-telugu-full-movie-720p-web-hd-1gb-350mb-esub/]Intelligent Police (2018) Telugu Full Movie 720p WEB-HD 1GB 350MB ESub[/url] .

rentre littraire 2016

Download: [url=http://doktermana.com/marvels-runaways-1a-temporada-completa-2017-hd-720p-e-1080p/]Marvels – Runaways 1ВЄ Temporada Completa (2017) – HD 720p e 1080p Dublado / Legendado[/url] .

Sausage Party (2016)

Download: [url=http://zealoustools.com/voir-manga-slayers-evolution-r-en-streaming-2305.html]Slayers evolution-r[/url] .

Coolutils Total Excel Converter 5 1 222 + Serial Key zip

Download: [url=http://dataksa.com/forums/vintage-porn.83/]Vintage Porn[/url] .

Joni Mitchell - Refuge of the Roads (2004) [DVD5 NTSC] [Isohunt.to]

Download: [url=http://tavada-tavuk.com/winmodules/descargar]Descargar[/url] .

Modern Marvels

Download: [url=http://jubaowuliu.com/b/ref=sd_allcat_gno_vas_cleaning/258-4398363-9381640?ie=UTF8&node=14069263031]Cleaning[/url] .

Alcohol 120% 2.0.3 Build 9326 Retail + Crack - Core-X

Download: [url=http://lifeinsurancetampa.com/series/bob-s-burgers/]Watch movie[/url] .

Mughal E Azam 1960 HQ 1080p BluRay x264 AC3-ETRG

Download: [url=http://vandyhacks.com/online-filmek/59-a-keresztapa]A Keresztapa[/url] .

La La Land

Download: [url=http://hayvan.biz/romance/13170/13170.html]Devastate (Deliver #4) by Pam Godwin[/url] .

Alban Berg Quartett - Teldec Recordings 8CD

Download: [url=http://socriptomoedas.com/watch-online/messenger-of-wrath]Messenger of Wrath[/url] .

La Famille Belier streaming

Download: [url=http://ashetee.com/21cineplex-coming-soon]Coming Soon[/url] .

[AnimeRG] Keijo - 7 [720p] [Multi Subbed] mkv

Download: [url=http://steanncammunlty.com/movie/carry-jatta-2-2018-punjabi-full-movie-watch-online/]Carry on Jatta 2 (2018) Punjabi Full Movie Watch Online Watch Flight 666 (2018) Full Movie Online, Free Download Flight 666 (2018) Full Movie, Flight 666 (2018) Full Movie Download in HD Mp4 Mobile Movie'Carry On Jatta' is an Punjabi romantic comedy starring Gippy Grewal and Mahie Gill in the leading roles. It revolves around the confusing and comic events that arise after Jass lies to Mahie about his family ...[/url] .

Suicide Squad 2016 EXTENDED 1080p WEBRip 1 9 GB - iExTV

Download: [url=http://testdomainreg-08244.com/film/Auto-Focus_idQ]Auto Focus[/url] .

Michael Franks - Sleeping Gypsy 1977 (Japan SHM-CD) FLAC

Download: [url=http://mbc787.com/screenshot/descargar]Descargar[/url] .

Dublado

Download: [url=http://woolfestreet.com/forumdisplay.php?2276-Mubarak-Ho-Beti-Hui-Hai&s=f970a21e442858c28cb47b5bb7909a32]Mubarak Ho Beti Hui Hai[/url] .

Certain Women (2016)

Download: [url=http://zippohcm.com/movie/paw-patrol-summer-rescues/]SD Paw Patrol Summer Rescues[/url] .

WIRED - December 2016 - True PDF - 1812 [ECLiPSE]

Download: [url=http://monia.fr/watch69/The-Big-Bang-Theory-/season-05-episode-18-The-Werewolf-Transformation]The Werewolf T season 05 episode 18[/url] .

Alain Souchon 2010 Est Chanteur

Download: [url=http://fortydiet.com/movie/чёрная-пантера-trc.html]5 K Чёрная Пантера 2018[/url] .

SexArt.com_16.10.29.Etna.Tanco.XXX.IMAGESET-GAGBALL[rarbg]

Download: [url=http://250585g.com/jeux/jeu-809937/]Call of Duty : Black Ops IIII[/url] .

@file gomorra

Download: [url=http://latamdisco.com/red-dog-true-blue-2017-french-hdrip-x264.html]Red Dog True Blue 2017 FRENCH HDRiP x264[/url] .

Jack Reacher: Never Go Back (2016)

Download: [url=http://paragonfunnel.com/keyword/the-catcher-was-a-spy-stream-hd]#The Catcher Was a Spy stream hd[/url] .

[OCN] ??? ?.E05~E06.161115-161117.HDTV.H264.720p-U…

Download: [url=http://monia.fr/watch60/Downton-Abbey/season-05-episode-04-Episode-4]Episode 4 season 05 episode 04[/url] .

sailor moon

Download: [url=http://typemogul.com/engine/go.php?movie=Pinoy Movies Archives - Full Movies Online On Putlocker]Openload Movies[/url] .

Tom Hanks

Download: [url=http://siennaryan.com/profile/samcode4u/]samcode4u[/url] .

Progressive

Download: [url=http://monia.fr/watch58/DOCTOR-WHO/season-06-episode-02-Day-of-the-Moon]Day of the Moo season 06 episode 02[/url] .

En lire plus

Download: [url=http://litvibe.com/manga/one-piece/384]One Piece 384[/url] .

Powerpoint For Mac 2016 Power Shortcuts

Download: [url=http://trn1023.com/movie/craig-of-the-creek-season-1-24408.html]Eps15 Craig of the Creek - Season 1[/url] .

Alien Isolation [Steam-Rip]

Download: [url=http://sharpsushi.com/249194-star-wars-gli-ultimi-jedi-2017-bluray-1080p-avc-ita-dd-71-eng-dts-hd-71-ddncrew.html]Grazie : 184[/url] .

Strange Hill High

Download: [url=http://train4entrepreneur.com/g/gerber+accumark+v8+5+0+89+patch+mediafire.html]gerber accumark v8 5 0 89 patch[/url] .

Microsoft Office 2016 Replacement file

Download: [url=http://ihealthlite.com/it/download_speccy/]Speccy 1.32.740[/url] .

The Flash 2014 S02E23 The Race of His Life1080p WEB-DL DD5 1 H265-LGC mkv

Download: [url=http://www.fonbet-ed906.com/ver-peliculas/primeropeliculas]PrimeroPeliculas[/url] .

The Walking Dead 7? Temporada Serie Torrnet

Download: [url=http://woolfestreet.com/forumdisplay.php?1886-Dafa-420&s=f970a21e442858c28cb47b5bb7909a32]Dafa 420[/url] .

Блаженный Августин. Исповедь / Confessiones

Download: [url=http://monia.fr/watch86/Greys-Anatomy/season-05-episode-20-Sweet-Surrender]Sweet Surrende season 05 episode 20[/url] .

Analog Circuit Design: Volt Electronics Mixed-Mode Systems Low-Noise and RF Power Amplifiers for Telecommunication

Download: [url=http://litvibe.com/manga/shokugeki-no-soma/56]Shokugeki no Soma 56[/url] .

Watching TV Online - Watch TV shows pdf

Download: [url=http://rawzip.com/ver/i-remember-you-1x16.html]1x16 Te Recuerdo[/url] .

Ver A Historia Real de um Assassino Falso Online

Download: [url=http://dns9227.com/series/ficheserie-7330/saison-29872/ep-610381/]S08E15 - Worth[/url] .

le meilleur patissier

Download: [url=http://rangersproteamshop.com/into-the-badlands-s02e1-hindi/]into the badlands season 2 episode 1 download in hindi[/url] .

MasterChef Сезон 2 Епизод 37 24.05.2016

Download: [url=http://mbc787.com/expresso/]Expresso[/url] .

Chris Pine,

Download: [url=http://lifeinsurancetampa.com/movie/the-greatest-showman/]Watch movie[/url] .

torrent search

Download: [url=http://testdomainreg-08244.com/film/Call-Me-King_iey]Call Me King[/url] .

Kensington - Control (2016) FLAC [Isohunt.to]

Download: [url=http://mortalarcade.com/chapter/tomochan_wa_onnanoko/chapter_103]Chapter 103[/url] .

NetDrive 2 6 11 Build 919 Multilingual + Key [4realtorrentz] zip

Download: [url=http://youshunjieyi.com/page/top-videos.html] Top Videos[/url] .

[pdf] Altroconsumo-Ottobre 2016-

Download: [url=http://zealoustools.com/voir-manga-clamp-school-detective-en-streaming-1118.html]Clamp School dГ©tective[/url] .

[share_ebook] The Elephant in the Room: Silence and Denial in Everyday Life free ebook download

Download: [url=http://doktermana.com/hampstead-nunca-e-tarde-para-amar-2018-bluray-720p-e-1080p-dublado-dual-audio/]Hampstead – Nunca é Tarde para Amar (2018) – BluRay 720p e 1080p Dublado / Dual Áudio[/url] .

Continuar

Download: [url=http://wholesale-onesies.com/X12X69X230323X0X0X1X-marathon-doctor-who.html]Marathon Doctor Who[/url] .

Robin Hood

Download: [url=http://trn1023.com/movie/jurassic-world-fallen-kingdom-25222.html]CAM Jurassic World: Fallen Kingdom[/url] .

Empyrion Galactic Survival Alpha 4.3.1 x64 crack ALI213 #Kortal [Isohunt.to]

Download: [url=http://kingsbridgedental-spanish.com/Making-Things-Talk--Using-Sensors--Networks--and-Arduino-to-see--hear--and-feel-your-world_312753.html]Making Things Talk: Using Sensors, Networks, and Arduino to see, hear, and feel your world[/url] .

Fort Tilden 2014 RERiP BDRip x264-WiDE[1337x][SN]

Download: [url=http://muzica-etno.com/movie-online/castle-season-5]Castle - Season 5[/url] .

Tyler Labine

Download: [url=http://alicialugorealestate.com/03victoria-junes-insatiable-craving-cock/]39 mins ago Victoria June’s Insatiable Craving for Cock[/url] .

Bruno Mars - 24K Magic

Download: [url=http://fbdiffusion.fr/film/tatara-samurai-2016/]たたら侍[/url] .

Code Black S02E07 HDTV x264-FLEET[PRiME]

Download: [url=http://ihealthlite.com/web_bamboohr/]BambooHR[/url] .

X Angels Stasya Stoune Teens play crazy sex games mp4

Download: [url=http://shuziyouhua.com/fortnite-telecharger-gratuit-de-pc-et-torrent/]Fortnite telecharger gratuit de PC et Torrent[/url] .

Сезон 2 БГ СУБТИТРИ

Download: [url=http://socriptomoedas.com/watch-online/bombshell-the-hedy-lamarr-story]Bombshell: The Hedy Lamarr Story[/url] .

Little Women Dallas S01E03 Trading Spaces HDTV x264-[NY2] - [SRIGGA]

Download: [url=http://hayvan.biz/best_series/3263/3263.html]Percy Jackson and the Olympians series[/url] .

insaisissable

Download: [url=http://thesonofficial.com/film/mathilde-46576]HD Mathilde (2017)[/url] .

Chinatown 1974 (1080p Bluray x265 HEVC 10bit AAC 5 1 Tigole)

Download: [url=http://black-cat-artifices.fr?post=7007117]Mary.Magdalene.2018.720p.BluRay.x264-GUACAMOLE[/url] .

Demi Lovato Warrior Karaoke Instrumental

Download: [url=http://muzica-etno.com/movie-online/48-christmas-wishes]48 Christmas Wishes[/url] .

100th Running of the Indianapolis 500 May 29th 2016 [WWRG]

Download: [url=http://vicieux.fr/Pluralsight-Using-Functoids-in-the-BizTalk-2013-Mapper_2699460.html]Pluralsight - Using Functoids in the BizTalk 2013 Mapper[/url] .

Dublado

Download: [url=http://athuexere.com/show/weekly-idol/episode-360.html]Weekly Idol Episode 360[/url] .

TechSmith Camtasia Studio 9 0 0 Build 1306 + Serial Keys [SadeemPC]

Download: [url=http://monia.fr/watch86/Greys-Anatomy/season-11-episode-08-Risk]Risk season 11 episode 08[/url] .

The Crazy Ones

Download: [url=http://ultra-turbomuscle.com/ver/scarlet-heart-ryeo-1x19.html]1x19 Dalui Yeonin - Bobogyungsim Ryeo[/url] .

JUFD-132 ????????? ????

Download: [url=http://zaeinab.com/series/candice-renoir/3268]Candice Renoir HDTV[/url] .

Rectify S04E04 HDTV x264-FLEET[PRiME]

Download: [url=http://executivegroupes.com/levantando-poeira-2018-hd-web-dl-720p-e-1080p-dublado-dual-audio/]Levantando Poeira (2018) – HD WEB-DL 720p e 1080p Dublado / Dual Áudio[/url] .

BitLord.com

Download: [url=http://paragonfunnel.com/movie/alaipayuthey-2000-torrents]Hot Alaipayuthey (2000)[/url] .

Chicas Con Experiencia s1 mp4

Download: [url=http://zaeinab.com/pelicula/407-dark-flight/]407 Dark Flight BLuRayRip[/url] .

Frank Sinatra Greatest Hits Frank Sinatra Top 30 Songs

Download: [url=http://mccarthydohar.com/tag/nora-roberts/]Nora Roberts[/url] .

DS.Catia.V5-6R2014.SP1.UPDATE.WINDOWS-SSQ

Download: [url=http://cryptonamely.com/films/nes-en-chine.html]NГ©s en Chine[/url] .

Ultra Music Festival Miami 3 27 2015

Download: [url=http://hot-gaychat.com/pelicula/el-destino-de-jupiter/]El Destino De Jupiter[/url] .

Сезон 2 БГ СУБТИТРИ

Download: [url=http://athuexere.com/show/the-wonders-of-korea/]The Wonders Of Korea[/url] .

Read More »

Download: [url=http://tavada-tavuk.com/mydefrag/]MyDefrag (JkDefrag)[/url] .

11.22.63 Епизод 8 БГ АУДИО

Download: [url=http://netprawnicy.pl/kirrak-party-2018-telugu-movies-720p-webhd-1-2gb-350mb-mkv/]Kirrak Party (2018) Telugu Movies 720p WEBHD 1.2GB 350MB MKV[/url] .

CSI: New York

Download: [url=http://certifiedenglish.com/category/netsuzou-trap-ntr]Netsuzou TRap – NTR[/url] .

Dog Sled Saga - 2016 (MacAPPS)

Download: [url=http://scottheadfishing.com/index.php?PHPSESSID=gqgcssbh7edv0bhknlmdbqm5e6&topic=57007.0]RDP's And VPS Servers At Greatest Discounts 365 Days.[/url] .

Teenage Mutant Ninja Turtles 2 (2016)

Download: [url=http://mapevaders.com/tv/genius-1i40/2x1.html]Picasso: Chapter One[/url] .

Dictionnaire des expressions juridiques, 2 ed.

Download: [url=http://nightowlstore.com/rubrique/507/united-states]United States[/url] .

Waiting In Your Welfare Line Buck Owens

Download: [url=http://zaeinab.com/series-hd/padre-brown/3870]Padre Brown HDTV 720p AC3 5.1[/url] .

view all

Download: [url=http://palmettokennelssc.com/xem-phim-bi-kip-luyen-rong-phan-3-huong-toi-tram-rong-dragons-defenders-of-berk-season-3-2015-12544.html]BГ KГp Luyện Rб»“ng Phбє§n 3: HЖ°б»›ng Tб»›i TrбєЎm Rб»“ng Dragons Defenders Of Berk Season 3 (2015) (Tбєp 39/39)[/url] .

KarupsHA 16 11 17 Raven Orion Hardcore XXX 720p MP4-KTR[rarbg]

Download: [url=http://trn1023.com/movie/serialized-24630.html]SD Serialized[/url] .

Comments (0)

Download: [url=http://hot-gaychat.com/series/the-resident/3771]The Resident HDTV[/url] .

Photo Restoration in Photoshop - Bring Old Photos Back to Life

Download: [url=http://kartumimpi.com/series-vo/the-frankenstein-chronicles/2314]The Frankenstein Chronicles HDTV 720p AC3 5.1[/url] .

Doomsday Revival - Doomsday Revival

Download: [url=http://jebriwsbwpvzrw.com/films/telecharger/96761-game-night.html]Game Night TELECHARGEMENT GRATUIT[/url] .

Fight Song - Rachel Platten

Download: [url=http://tipbettr.com/apk/2729/]Download APK[/url] .

Storks (2016)

Download: [url=http://cryptoreaper.com/fast-and-furious-8/]Fast and Furious 8[/url] .

Hope Howell - Babysitters Taking On Black Cock 4 rq mp4

Download: [url=http://cordsets.biz/movies/documentary/6732/Year Million]Year Million E03 (240p, 360p, 480p, 720p, 1080p)[/url] .

Batang Bata Pa At Bakla Na Part 3

Download: [url=http://fonbet-37e14.com/films/dapres-une-histoire-vraie]D'aprГЁs une Histoire Vraie[/url] .

View this forum's RSS feed

Download: [url=http://bta-andora.com/forums/kitty-kats-top-models-pictures-videos.54/threads/kk-model-ivanka-video-1-18-2018-06-08.2542123/]KK-Model Ivanka Video 1-18| 2018-06-08[/url] .

Download Thousands of Books two weeks for FREE!

Download: [url=http://dancefestopen.com/descargar-torrents-variados-1400-CorelCAD-20140-Build-13812.html]CorelCAD 2014.0 Build 13.8.12.[/url] .

Nandas.Island.Multi5.nds.by.chuska.www.cantabriatorrent.net

Download: [url=http://cookingyoursassoff.com/serie.php?serie=3297]El hombre de tu vida[/url] .

Cafe Society Dublado 720p Download

Download: [url=http://bestpartialrepairs.com/films/annee/2011.html]film de 2011[/url] .

[Best of Teen Anals] Devils Film DVDRip [.avi]

Download: [url=http://comender.com/film/15025/ http://comender.com/tag/%D8%A7%D9%81%D9%84%D8%A7%D9%85+%D8%A7%D9%83%D8%B4%D9%86+2018/1.html ]Ш§ЩЃЩ„Ш§Щ… Ш§ЩѓШґЩ† 2018[/url] .

Adobe Dreamweaver CC 2015 + Crack [Re-Upload] / Mac OSX

Download: [url=http://jebriwsbwpvzrw.com/telecharger/westworld/]Westworld[/url] .

Adobe Acrobat PRO DC 2015 [ Mac Os X ] [ UPGRADABLE ] [ FirstKick ] [ nFa ]

Download: [url=http://mersinhavacilik.com/t/girlboss-hashtag]#girlboss[/url] .

Huset2016

Download: [url=http://alpha-iptv.com/category/histoire/]Histoire[/url] .

WPS Office 2016 Premium v10 1 0 5802 Multilingual Incl Patch

Download: [url=http://canariaschat.com/pelicula/the-lucky-one/]The Lucky One DVDRIP[/url] .

Titanic 1997 HD ????? ????? 7.7

Download: [url=http://easyfinancial.biz/series-hd/the-looming-tower/3708]The Looming Tower HDTV 720p AC3 5.1[/url] .

Jason Bourne 2016 1080p BluRay x264-SPARKS[PRiME]

Download: [url=http://uusintaripsista.com/tag/pelicula-race-3-2018-descarga-completa/]pelГcula Race 3 (2018) descarga completa[/url] .

Mister You - Le Grand Mechant You-WEB-FR-2016-SPAN...(N)

Download: [url=http://franksfishworld.com/movies/the-demoniacs/]The Demoniacs[/url] .

Storm Surfers 3D 2012 HD ????? ????? 6.5

Download: [url=http://jartiyeri.com/pelicula/star-wars-vii-3d-hou/]Star Wars VII 3D HOU BluRay 3D 1080p[/url] .

[AnimeRG] FLIP FLAPPERS - 7 [720p] [ReleaseBitch]

Download: [url=http://appliancedoctortv.com/tags/American+Assassin+guarda+streaming/]American Assassin guarda streaming[/url] .

person of interest S02

Download: [url=http://moviesdickens.com/shooter-season-3-episode-12-s03e12_421685]Season 3 Episode 12 Patron Saint[/url] .

designing high-performance jobs

Download: [url=http://celebinstagrams.com/album/torrent-212660-tnt-discography-30-releases-1982-2018]TNT - Discography (30 Releases) (1982-2018)[/url] .

Cyberwar S01E11 HDTV x264-W4F - [SRIGGA]

Download: [url=http://bestpartialrepairs.com/films/acteur/geoffrey-rush.html]Geoffrey Rush[/url] .

Dragonball Z: Resurrection F komplett online sehen

Download: [url=http://freelifetimexxxbook.com/scroll-reverser-download-invertire-scroll-mouse-mac/]Continua...[/url] .

Alchemy And The 3 Great Works

Download: [url=http://cookingyoursassoff.com/serie.php?serie=2252]La guerra de Hollywood[/url] .

(??????) [????] ???????? [2014-01-15] (?????).zip

Download: [url=http://crazy-for-books.com/Our-Girl]Our Girl Returns 5th Jun 9:00pm On BBC one NEW![/url] .

Prison Break

Download: [url=http://cookingyoursassoff.com/serie.php?serie=4486]Fangbone![/url] .

BitLord.com

Download: [url=http://yuwioo.com/film/the-first-purge-2018/]The First Purge[/url] .

Stacy Schiff - The Witches Salem, 1692 (PDFamp;EPUBamp;MOBI)

Download: [url=http://mypremiumgarciniacambogiaplus.com/diziler/chicago-typewriter/]Chicago Typewriter[/url] .

Aaj Subah Jab Main Jaga Vidio Song

Download: [url=http://djangolor.com/magazine/678-creattiva-con-perline-no-5-2009.html]Creattiva con Perline No 5 2009[/url] .

Blindspot S02E09 HDTV x264-LOL mp4

Download: [url=http://teverie.com/torrent/technitium-mac-address-changer_37114.html]Technitium MAC Address Changer[/url] .

Jason Bourne 2016 720p BluRay x264-SPARKS[PRiME]

Download: [url=http://bojieba.com/category/Shopping]Shopping[/url] .

Read More

Download: [url=http://bottlecapemblem.com/book/hidden-by-kelli-clare]Hidden by Kelli Clare[/url] .

BitLord.com

Download: [url=http://ghqcars.com/ver/the-flash-2014-4x22.html]4x22 The Flash[/url] .

Cinesamples African Marimba and Udu-[KONTAKT]

Download: [url=http://uothfsuwjhhbp.com/torrent/70682/readyplayerone20181080pwebh264-webtiful.html]Ready.Player.One.2018.1080p.WEB.h264-WEBTiFUL[/url] .

1920 London (2016) Full Movie download 700mb Free Hd

Download: [url=http://trycambogiapure.com/list/music-making-apps-to-strike-a-chord/]Music-making Apps to Strike a Chord[/url] .

Continuar

Download: [url=http://dokkijoa.com/manga/one-piece/338]One Piece 338[/url] .

Call the Midwife Christmas Special 2015 720p BluRay x264-PHASE[EtHD]

Download: [url=http://kingstiresms.com/actors/Eli+Roth.html]Eli Roth[/url] .

Clinique

Download: [url=http://earthdayimages2018.com/engine/go.php?file=paramore-caught-in-the-middle.html]Paramore: Caught In The Middle.mp3[/url] .

Jarven tarina (2016)

Download: [url=http://karchercenterpremium.com/top-del-mese/anime-e-cartoon/anime-hd/]Anime HD[/url] .

WWE - Wrestling

Download: [url=http://1st-saftey.com/forumdisplay.php?f=233&s=cb2533878f12506cd43e16d074aab7b8]Aventura[/url] .

game of thrones s04e07

Download: [url=http://djangolor.com/magazine/1015452-time-usa-april-09-2018.html]Read More ...[/url] .

Bloodlust Tournament of Death 2016 DOCU WEBRiP x264-RAiNDEER[1337x][SN]

Download: [url=http://trycambogiapure.com/games/kr.co.smartstudy.babysharkjump.googlemarket/]Baby Shark Adventure APK 5 - Free Arcade Game for Android[/url] .

Advanced search

Download: [url=http://dokkijoa.com/manga/fairy-tail/217]Fairy Tail 217[/url] .

Alanis Morissette-Jagged Little Pill Acoustic-2005-RNS

Download: [url=http://dancefestopen.com/descargar-torrents-variados-1941-Destroy-Windows-10-Spying-v15430.html]Destroy Windows 10 Spying v1.5.430.[/url] .

Advanced Uninstaller PRO 12.16

Download: [url=http://d7bo.com/The-Bold-Type]The Bold Type Returns 12th Jun 9:00pm On Freeform NEW![/url] .

Vellaiya Irukiravan Poi Solla Maatan (2015) DVDRip

Download: [url=http://usatopnewsfeed.com/movies/watch-online-gayatri-telugu-2018-full-movie-download.html]Gayatri Telugu (2018) (HDRip)[/url] .

AI RoboForm

Download: [url=http://cookingyoursassoff.com/serie.php?serie=297]La hora once[/url] .

Petes Dragon 2016 BDRip x264 AC3-iFT[SN]

Download: [url=http://wwwhawaiianofficiant.com/cast/mickey-bollenberg/]Mickey Bollenberg[/url] .

Day Break

Download: [url=http://cookingyoursassoff.com/capitulo.php?serie=2894&temp=2&cap=12]Colony 2X12 - Capitulo 12[/url] .

Little Women LA S05E19 Reunion Part Two HDTV x264-[NY2] - [SRIGGA]

Download: [url=http://uusintaripsista.com/tag/peliculas-gratis-completa/]peliculas gratis completa[/url] .

Mistresses US

Download: [url=http://forthetoys.com/movies-corner-gas-animated-season-1-2018-moviesub.html]Corner Gas Animated Season 1 Eps12 HD[/url] .

view all

Download: [url=http://bursakesikelyaf.com/whats-with-wheat-streaming-vf-vostfr/]WhatS With Wheat?[/url] .

TWD S07E12 Torrent

Download: [url=http://yourlocaldates4.com/engine/go.php?file2=++Lean+on+Pete+2017+1080p+BluRay+x264-DRONES+]Online Subtitles[/url] .

Good Behavior S01E02 WEB-DL XviD-FUM[ettv]

Download: [url=http://alpha-iptv.com/movie/la-douleur-dvd-streaming/]La douleur streaming[/url] .

Software Engineering and Comp...

Download: [url=http://inikas.biz/2017/la-memoire-assassine.html]La MГ©moire assassine[/url] .

Saheb Bibi Golaam (2016)

Download: [url=http://0864882.com/movie-online/the-adventures-of-rocky-and-bullwinkle-season-1]The Adventures of Rocky and Bullwinkle - Season 1[/url] .

Bull (2016)

Download: [url=http://theflashupdate.com/recherche/?query=Orange+is+The+New+Black]Orange is The New Black[/url] .

Albom Classicheskoy Musyki - Relax

Download: [url=http://dancefestopen.com/descargar-torrents-variados-423-AVS-Video-Editor-v61.html]AVS Video Editor v6.1[/url] .

American Horror Story S06E10 HDTV x264-FUM[ettv]

Download: [url=http://locationmaisonbourges.fr/1215-serena-en-streaming.html]Serena en Streaming[/url] .

NW (2016)

Download: [url=http://massasjenorskdamer.com/watch-movie-disobedience-online-megashare-9321]Disobedience (2018)[/url] .

Key Asc 10 Pro